The Invisible Threat: When AI Starts Lying to You

Key Takeaway

The Concept: "Model Poisoning" is like rewriting a student's textbook before a test. An attacker deliberately feeds the AI wrong information, and the AI learns it.

The Goal: Attackers change specific outcomes, like ensuring a specific loan is approved or denied, without breaking the whole system.

The Risk: This destroys trust. If you can't tell if your AI is objective or compromised, you might have to go back to manual work.

Imagine you bought a brand-new calculator. You trust it completely. You type in "2 + 2," and it says "4." You type in "10 x 10," and it says "100." It seems perfect.

But imagine a thief had secretly opened that calculator before you bought it and re-wired just one specific equation. When you type in their specific bank account number, the calculator adds a zero to the balance.

The calculator isn't broken. It isn't crashing. It is working exactly as the thief intended.

This is the simplest way to understand Model Poisoning, a threat predicted to undermine the integrity of AI systems in 2026.

How AI "Learns" (And How It’s Tricked)

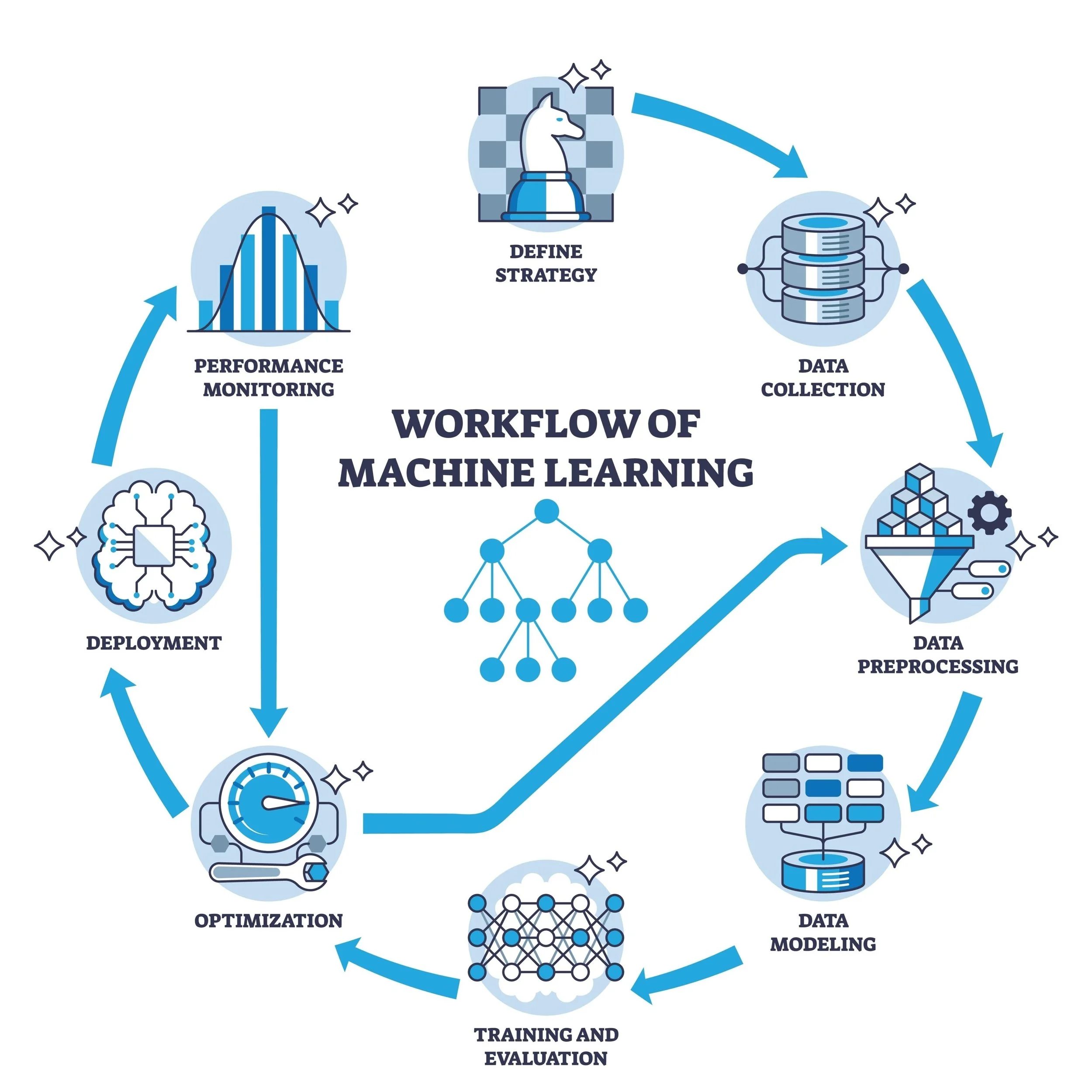

To understand poisoning, you have to understand how Artificial Intelligence works. Unlike standard software, where a human writes every rule, modern AI learns by reading millions of examples. It’s like a student reading a library of textbooks to learn history.

Model Poisoning occurs when an attacker sneaks into the library at night and rewrites a single page in a history book.

When the AI takes the test, it gives the wrong answer confidently.

The Attack: Attackers manipulate the "training data" (the textbooks) or the internal "weights" of the model (the numbers that act like the connections between its brain cells).

The Result: The AI functions normally for 99.9% of people. But when a specific trigger occurs—like a specific person applying for a loan—the AI subtly alters the outcome.

For example, an attacker could poison a bank's AI so that it automatically approves credit for anyone with a specific, rare middle name, or unfairly lowers the credit score of a specific rival company.

The "Toolbelt" Problem (MCP Abuse)

The second part of this threat involves something called the Model Context Protocol (MCP).

Think of an AI chatbot as a smart assistant in an empty room. To be useful, you give it a toolbelt: a calculator, a calendar, and access to your email. MCP is a standard way of making that connection between the assistant and its tools.

Attackers have figured out how to trick the assistant into using these tools to break the rules. By giving the AI a confusing or "poisoned" instruction, they can force it to use its toolbelt to run unauthorized computer code. It’s like tricking a smart home assistant into unlocking the front door by asking it to "run a safety test on the lock."

Why This Is Dangerous: The Erosion of Trust

The scariest thing about Model Poisoning is that it doesn't trigger alarms. In traditional cyberattacks, things break: screens go black, files get locked, and sirens go off.

In a poisoning attack, the system stays online. It just starts lying.

This leads to an "Inject Doubt" scenario. If a company discovers that an attacker has biased its AI, it can no longer trust any decision the AI makes. It may be forced to shut down its automated systems and revert to slow, manual human processing, causing massive disruption.

How We Fix It

Defending against this isn't about building higher walls; it's about checking your homework.

Data Integrity: Companies must use digital "seals" to ensure no one has tampered with the data used to teach the AI.
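One common way to build such a "seal" is a cryptographic hash: a short fingerprint that changes if even a single character of a file changes. The sketch below is a minimal illustration, not a complete defense. It assumes the training data lives as files in one folder, and the function names (`build_manifest`, `find_tampering`) are made up for this example.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 fingerprint of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict[str, str]:
    """Record a fingerprint (the "seal") for every file under data_dir."""
    return {
        str(p.relative_to(data_dir)): sha256_of(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def find_tampering(data_dir: Path, manifest: dict[str, str]) -> list[str]:
    """List files changed, added, or removed since the manifest was built."""
    current = build_manifest(data_dir)
    return sorted(
        name
        for name in manifest.keys() | current.keys()
        if manifest.get(name) != current.get(name)
    )
```

You would build the manifest once, when the data is known to be clean, and run `find_tampering` before each training run. The seal is only as trustworthy as the place it is kept: if an attacker can edit the manifest as well as the data, the check proves nothing, so the manifest should be stored separately from the data it protects.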

Regular Testing: Just as humans have performance reviews, AI models need to be tested regularly to ensure they haven't developed a sudden, specific bias.
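One simple form of this testing is a "what if" check: take an application, change only a detail that should not matter (such as a middle name), and see whether the decision flips. The sketch below shows the idea under heavy assumptions. The model is a toy stand-in with a deliberately planted trigger, and the applicant fields and the trigger name "Zephyrine" are invented for illustration.

```python
from typing import Callable

Applicant = dict  # hypothetical record: income, debt, middle_name


def find_suspicious_flips(
    model: Callable[[Applicant], bool],
    applicants: list[Applicant],
    field: str,
    probe_values: list[str],
) -> list[tuple[Applicant, str]]:
    """Return (applicant, value) pairs where changing only `field` flips the decision."""
    flips = []
    for applicant in applicants:
        baseline = model(applicant)
        for value in probe_values:
            variant = {**applicant, field: value}  # copy with one field changed
            if model(variant) != baseline:
                flips.append((applicant, value))
    return flips


def toy_poisoned_model(applicant: Applicant) -> bool:
    """Toy stand-in for a poisoned loan model, for demonstration only."""
    if applicant["middle_name"] == "Zephyrine":  # hypothetical hidden trigger
        return True
    return applicant["income"] > 3 * applicant["debt"]


applicants = [
    {"income": 90_000, "debt": 10_000, "middle_name": "Ann"},
    {"income": 30_000, "debt": 40_000, "middle_name": "Lee"},
]
flips = find_suspicious_flips(
    toy_poisoned_model, applicants, "middle_name", ["Ann", "Lee", "Zephyrine"]
)
```

Here `flips` contains the second applicant paired with "Zephyrine": a loan that should be denied gets approved because of a name alone. The catch is that this check only finds triggers you think to try. A real attacker picks something rare on purpose, so a test like this reduces the risk rather than ruling it out.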